Integrating Runtime Testing into Autonomic Systems

Motivation

Autonomic computing (AC) has led to an increase in the development of software systems that can configure, optimize, heal, and protect themselves. Such systems are generally characterized by dynamic adaptation and updating, in which the software responds to changing conditions by adding, removing, and replacing its own components at runtime. This capability raises quality concerns since faults may be introduced into the system as a result of self-adaptation. Runtime validation and verification (V&V) is therefore expected to play a key role in the deployment of autonomic software. However, IBM's architectural blueprint for AC does not describe runtime testing support in autonomic software, and few researchers have addressed this subject in the literature.

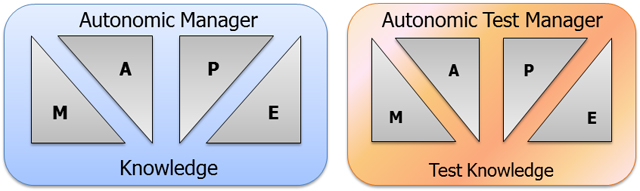

Approach

We introduce an implicit self-test characteristic into autonomic software. Autonomic Self-Testing (AST) tailors the existing self-management infrastructure for runtime testing activities such as test execution, code coverage analysis, and post-test evaluation. Autonomic managers are specialized for runtime testing using a policy-driven technique and linked to an interface of automated testing tools. Since autonomic test managers (TMs) are designed, developed, accessed, and controlled in the same manner as traditional autonomic managers, they can be seamlessly integrated into the workflow of autonomic systems. Furthermore, like autonomic managers, TMs are highly configurable and extensible, thereby allowing the self-testing process and infrastructure to adapt as the software undergoes structural and behavioral changes. Reusing the self-management infrastructure for testing may also lead to a scalable runtime testing process because validation can be performed on-demand.
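To make the idea of a policy-driven test manager more concrete, the following is a minimal sketch in Java. It is not the project's actual design: the class `TestManagerSketch`, the `TestPolicy` format (a single minimum pass rate), the `Decision` values, and the use of a `Predicate<String>` as a stand-in for the testing-tool interface are all assumptions made for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical sketch of a policy-driven test manager (TM); every name here is illustrative.
class TestManagerSketch {

    // Outcome the TM reports back to the self-management workflow.
    enum Decision { ACCEPT_CHANGE, REJECT_CHANGE }

    // Assumed policy format: the minimum fraction of tests that must pass.
    static final class TestPolicy {
        final double minPassRate;

        TestPolicy(double minPassRate) {
            this.minPassRate = minPassRate;
        }
    }

    private final TestPolicy policy;
    // Stands in for the testing-tool interface: returns true when the named test passes.
    private final Predicate<String> testingTool;

    TestManagerSketch(TestPolicy policy, Predicate<String> testingTool) {
        this.policy = policy;
        this.testingTool = testingTool;
    }

    // Runs the given tests and applies the policy to the observed pass rate.
    Decision validate(List<String> testIds) {
        if (testIds.isEmpty()) {
            return Decision.REJECT_CHANGE; // nothing was validated, so stay conservative
        }
        int passed = 0;
        for (String id : testIds) {
            if (testingTool.test(id)) {
                passed++;
            }
        }
        double passRate = (double) passed / testIds.size();
        return passRate >= policy.minPassRate ? Decision.ACCEPT_CHANGE : Decision.REJECT_CHANGE;
    }

    public static void main(String[] args) {
        // Fake tool for the demo: every test passes except "t2".
        Predicate<String> fakeTool = id -> !id.equals("t2");
        List<String> tests = Arrays.asList("t1", "t2", "t3", "t4");

        TestManagerSketch strict = new TestManagerSketch(new TestPolicy(1.0), fakeTool);
        TestManagerSketch lenient = new TestManagerSketch(new TestPolicy(0.75), fakeTool);

        System.out.println(strict.validate(tests));  // REJECT_CHANGE (3 of 4 passed)
        System.out.println(lenient.validate(tests)); // ACCEPT_CHANGE (0.75 >= 0.75)
    }
}
```

Because the policy is a separate object, the same manager logic can be reconfigured at runtime by swapping in a different policy, which is one plausible way to read the configurability described above.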

Scope and Challenges

This project focuses on formulating new workflows, validation strategies, designs, algorithms, and programming techniques to support the integration of runtime testing activities into autonomic software. Some of the major research challenges of this work include: (1) selecting and scheduling regression tests to run after dynamic software adaptation or updating; (2) generating new test cases and removing existing tests that may no longer be applicable due to changes in program structure; (3) optimizing the performance of the runtime testing engine to avoid degradation of the processing, memory, and timing characteristics of the software beyond acceptable limits; (4) identifying an appropriate set of testing and test management tools to support AST; and (5) locating, developing, or simulating realistic autonomic software applications for the purpose of evaluating the approach.

Framework for Autonomic Self-Test

Developing a Reusable Self-Test Infrastructure for AC Systems

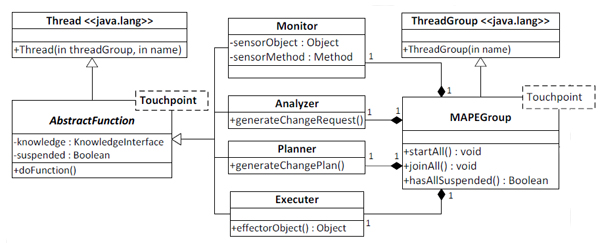

In this sub-project, we design, construct, and evaluate a software framework to support rapid development of self-testable autonomic systems. The framework was originally developed in Java using a combination of generics, reflection, and synchronized threads. We are currently porting the framework to .NET so that it can be used for testing cloud applications built on the Microsoft Windows Azure platform.

Current Students:

- Annaji Ganti, PhD Student

- Alain E. Ramirez, Java Developer, TracFone Wireless Inc.

- Rodolfo Cruz, Project Manager, Oceanwide Inc.

- Bárbara Morales-Quiñones, Undergraduate Student, University of Puerto Rico-Mayagüez.

Test Information Propagation

Synchronizing Test Cases after Dynamic Adaptation

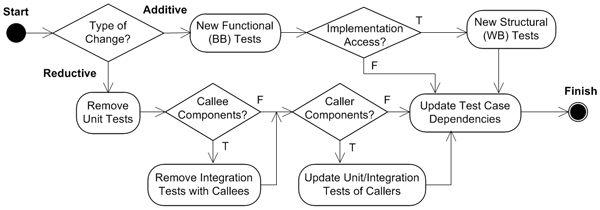

In this sub-project, we investigate a model-driven approach to map adaptive changes in autonomic software to updates for the runtime test model after adaptation. Our approach seeks to automate the steps that should be taken to update the test model of the autonomic system after new components have been introduced (additive changes), or existing components have been removed (reductive changes).
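As a rough illustration of how additive and reductive changes might be mapped to test model updates, the sketch below keeps a map from component names to the tests that exercise them. The class `TestModelSyncSketch`, the `ChangeKind` enum, and the map-based test model are hypothetical simplifications, not the model-driven approach itself.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical sketch: keeps a runtime test model in step with adaptive changes.
class TestModelSyncSketch {

    // The two kinds of adaptive change named in the text.
    enum ChangeKind { ADDITIVE, REDUCTIVE }

    // Assumed test model: component name -> ids of the tests that exercise it.
    private final Map<String, Set<String>> testsByComponent = new TreeMap<>();

    // Updates the test model for one adaptive change.
    void apply(ChangeKind kind, String component, Collection<String> newTests) {
        switch (kind) {
            case ADDITIVE:
                // A new component arrives together with the tests that validate it.
                testsByComponent.computeIfAbsent(component, k -> new TreeSet<>()).addAll(newTests);
                break;
            case REDUCTIVE:
                // A removed component no longer needs its tests (newTests is ignored).
                testsByComponent.remove(component);
                break;
        }
    }

    // All tests currently applicable to the running system, in sorted order.
    Set<String> activeTests() {
        Set<String> all = new TreeSet<>();
        for (Set<String> tests : testsByComponent.values()) {
            all.addAll(tests);
        }
        return all;
    }

    public static void main(String[] args) {
        TestModelSyncSketch model = new TestModelSyncSketch();
        model.apply(ChangeKind.ADDITIVE, "Cache", Arrays.asList("cacheHit", "cacheMiss"));
        model.apply(ChangeKind.ADDITIVE, "Logger", Arrays.asList("logWrite"));
        System.out.println(model.activeTests()); // [cacheHit, cacheMiss, logWrite]

        model.apply(ChangeKind.REDUCTIVE, "Cache", Collections.<String>emptyList());
        System.out.println(model.activeTests()); // [logWrite]
    }
}
```

In this simplified model, a test registered under several components stays active until every component that references it has been removed.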

Current Students:

- Mohammed Akour, PhD Student

- Akanksha Jaidev, MS Student

Dynamic Test Scheduling

Runtime Ordering and Execution of Regression Tests

In this sub-project, we investigate the use of dynamic test scheduling approaches in autonomic software. This involves: selecting a subset of tests for validating an adaptive change to the system based on existing regression testing strategies; ordering selected tests for execution in a manner that seeks to maximize one or more predefined testing goals; and revising the established test schedule in response to real-time changes.
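The select, order, and revise steps can be sketched as follows. This is only an illustration under stated assumptions: the `RegressionTest` fields, the selection rule (keep tests that cover a changed component), and the ordering goal (most past failures first, then shortest estimated run time) are hypothetical choices, not the strategies under study in this sub-project.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.Comparator;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical sketch of dynamic test scheduling: select, then order.
class TestSchedulerSketch {

    // Assumed test metadata; the field names are illustrative.
    static final class RegressionTest {
        final String id;
        final int pastFailures;
        final long estimatedMillis;
        final Set<String> coveredComponents;

        RegressionTest(String id, int pastFailures, long estimatedMillis, String... components) {
            this.id = id;
            this.pastFailures = pastFailures;
            this.estimatedMillis = estimatedMillis;
            this.coveredComponents = new HashSet<>(Arrays.asList(components));
        }
    }

    // Selects tests touching a changed component, then orders them so that
    // historically failure-prone tests run first and cheaper tests break ties.
    static List<String> schedule(Collection<RegressionTest> suite, Set<String> changedComponents) {
        return suite.stream()
                .filter(t -> !Collections.disjoint(t.coveredComponents, changedComponents))
                .sorted(Comparator.comparingInt((RegressionTest t) -> t.pastFailures).reversed()
                        .thenComparingLong(t -> t.estimatedMillis))
                .map(t -> t.id)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<RegressionTest> suite = Arrays.asList(
                new RegressionTest("t1", 1, 50, "A"),
                new RegressionTest("t2", 0, 20, "B"),
                new RegressionTest("t3", 3, 200, "A", "B"),
                new RegressionTest("t4", 1, 10, "A"));

        // Initial schedule after component A adapts.
        System.out.println(schedule(suite, new HashSet<>(Arrays.asList("A")))); // [t3, t4, t1]

        // Revision: component B changes at runtime, so the schedule is recomputed.
        System.out.println(schedule(suite, new HashSet<>(Arrays.asList("B")))); // [t3, t2]
    }
}
```

Here revising the schedule simply means recomputing it from the latest set of changed components; an incremental revision that preserves already-executed tests would need additional state.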

Current Students:

- Asha Yadav, MS Student

- Arti Katiyar, MS Student